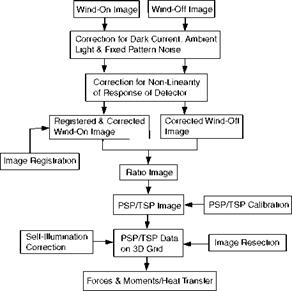

Optimization Method

To develop a simple and robust method for comprehensive camera calibration, the singularity problem that arises in solving the collinearity equations must be dealt with. Liu et al. (2000) proposed an optimization method based on the following insight. Strong correlation between the interior and exterior orientation parameters makes the normal-equation matrix singular when least-squares estimation is applied to the complete set of camera parameters. Therefore, to eliminate the singularity, least-squares estimation is used for the exterior orientation parameters only, while the interior orientation and lens distortion parameters are calculated separately using an optimization scheme. The optimization method thus contains two separate but interacting procedures: resection for the exterior orientation parameters, and optimization for the interior orientation and lens distortion parameters.
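The interplay of the two procedures can be illustrated with a minimal sketch. It is not the implementation of Liu et al. (2000): it assumes a distortion-free pinhole form of the collinearity equations with a positive sign convention, an assumed x-y-z rotation order for (ω, φ, κ), a principal point fixed at the origin, and an outer objective (RMS reprojection residual) chosen here for simplicity. The inner step is a Gauss-Newton resection for the six exterior parameters; the outer step is a golden-section search over the principal distance c alone. All function names are hypothetical.

```python
import numpy as np

def rotation(omega, phi, kappa):
    """Rotation built from angles about x, y, z (an assumed convention)."""
    co, so = np.cos(omega), np.sin(omega)
    cp, sp = np.cos(phi), np.sin(phi)
    ck, sk = np.cos(kappa), np.sin(kappa)
    rx = np.array([[1.0, 0.0, 0.0], [0.0, co, -so], [0.0, so, co]])
    ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    rz = np.array([[ck, -sk, 0.0], [sk, ck, 0.0], [0.0, 0.0, 1.0]])
    return rz @ ry @ rx

def project(ext, obj_pts, c, xp=0.0, yp=0.0):
    """Distortion-free pinhole projection to pixels (assumed model)."""
    ext = np.asarray(ext, dtype=float)
    d = (np.asarray(obj_pts, dtype=float) - ext[3:]) @ rotation(*ext[:3]).T
    return np.column_stack([xp + c * d[:, 0] / d[:, 2],
                            yp + c * d[:, 1] / d[:, 2]])

def resect(obj_pts, img_pts, c, ext0, iters=30, eps=1e-6):
    """Inner step: Gauss-Newton least squares for the exterior parameters only."""
    img_pts = np.asarray(img_pts, dtype=float)
    ext = np.asarray(ext0, dtype=float).copy()
    for _ in range(iters):
        r = (img_pts - project(ext, obj_pts, c)).ravel()
        jac = np.empty((r.size, 6))
        for k in range(6):  # central-difference Jacobian, one column per parameter
            step = np.zeros(6)
            step[k] = eps
            jac[:, k] = (project(ext + step, obj_pts, c)
                         - project(ext - step, obj_pts, c)).ravel() / (2.0 * eps)
        delta = np.linalg.lstsq(jac, r, rcond=None)[0]
        ext += delta
        if np.linalg.norm(delta) < 1e-12:
            break
    rms = np.sqrt(np.mean((img_pts - project(ext, obj_pts, c)) ** 2))
    return ext, rms

def calibrate_principal_distance(obj_pts, img_pts, ext0, c_lo, c_hi, iters=60):
    """Outer step: golden-section search over c; each trial c reruns the resection."""
    g = (np.sqrt(5.0) - 1.0) / 2.0
    cost = lambda c: resect(obj_pts, img_pts, c, ext0)[1]
    a, b = float(c_lo), float(c_hi)
    c1, c2 = b - g * (b - a), a + g * (b - a)
    f1, f2 = cost(c1), cost(c2)
    for _ in range(iters):
        if f1 < f2:   # minimum lies in [a, c2]
            b, c2, f2 = c2, c1, f1
            c1 = b - g * (b - a)
            f1 = cost(c1)
        else:         # minimum lies in [c1, b]
            a, c1, f1 = c1, c2, f2
            c2 = a + g * (b - a)
            f2 = cost(c2)
    c = 0.5 * (a + b)
    return c, resect(obj_pts, img_pts, c, ext0)[0]
```

Because the interior parameter never enters the least-squares system, the 6 × 6 normal equations of the inner step stay well conditioned for a 3D target field; the coupling between c and the camera position is resolved by the outer search instead.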

When the image coordinates (x, y) are given in pixels, we express the collinearity equations Eq. (5.1) as

topology, leading to faster convergence. The topological structure of std(x_p) or std(y_p) can also be affected by random disturbances on the targets. Larger noise in images leads to a slower convergence rate and produces a larger error in optimization computations. Although the simple ‘valley’ topological structure allows convergence of optimization computation over a considerable range of the initial values, appropriate initial values are still required to obtain a converged solution. The DLT can provide such initial values for the exterior orientation parameters (ω, φ, κ, X_c, Y_c, Z_c) and the principal distance c. Combined with the DLT, the optimization method allows rapid and comprehensive automatic camera calibration to obtain a total of 14 camera parameters from a single image without requiring a guess of the initial values.
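How a DLT can supply such starting values may be sketched as follows. This is the textbook 11-parameter DLT solved by singular value decomposition, not necessarily the exact formulation used with the optimization method; it assumes at least six non-coplanar targets, a distortion-free pinhole camera and zero skew. The function names are hypothetical, and only the perspective center and the principal distance are extracted here (the three angles would follow from the rotation part of the same matrix).

```python
import numpy as np

def dlt_projection_matrix(obj_pts, img_pts):
    """Estimate the 3x4 projection matrix (up to scale) from 3D-2D target pairs."""
    rows = []
    for (X, Y, Z), (x, y) in zip(np.asarray(obj_pts, float), np.asarray(img_pts, float)):
        h = [X, Y, Z, 1.0]
        # Each target contributes two homogeneous linear equations in the 12 entries.
        rows.append(h + [0.0] * 4 + [-x * v for v in h])
        rows.append([0.0] * 4 + h + [-y * v for v in h])
    _, _, vt = np.linalg.svd(np.array(rows))
    return vt[-1].reshape(3, 4)  # right singular vector of the smallest singular value

def perspective_center(P):
    """Camera position (X_c, Y_c, Z_c): the null vector of P, dehomogenized."""
    _, _, vt = np.linalg.svd(P)
    return vt[-1][:3] / vt[-1][3]

def interior_from_projection(P):
    """Principal distance and principal point in pixels, assuming zero skew."""
    M = P[:, :3] / np.linalg.norm(P[2, :3])  # scale so the third row is a unit vector
    xp, yp = M[0] @ M[2], M[1] @ M[2]
    cx = np.sqrt(M[0] @ M[0] - xp ** 2)
    cy = np.sqrt(M[1] @ M[1] - yp ** 2)
    return 0.5 * (cx + cy), xp, yp
```

The values returned by `perspective_center` and `interior_from_projection` are only starting points: the DLT ignores lens distortion, so the subsequent optimization is still needed to refine them.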


Fig. 5.2. Step target plate for camera calibration

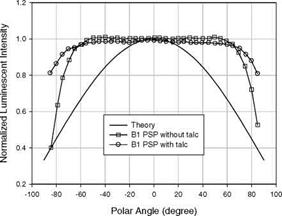

The optimization method was used to calibrate a Hitachi CCD camera with a Sony zoom lens (12.5 to 75 mm focal length) and with an 8 mm Cosmicar television lens. As shown in Fig. 5.2, a three-step target plate with a 2-in step height provided a 3D target field for camera calibration; 54 circular retro-reflective targets of 0.5-in diameter are placed on it at a 2-in spacing. Figure 5.3 shows the principal distance given by the optimization method versus zoom setting for the Sony zoom lens. Figures 5.4 and 5.5 show, respectively, the principal-point location and the radial distortion coefficient K as functions of the principal distance for the Sony zoom lens. The results given by the optimization method are in reasonable agreement with measurements for the same lens made with optical equipment in the laboratory (Burner 1995). The optimization method was also used to calibrate the same Hitachi CCD camera with the 8 mm Cosmicar television lens. Table 5.1 lists these calibration results, which compare well with those obtained using optical equipment.

To determine the interior orientation parameters accurately, the target field should fill the image used for camera calibration. In large wind tunnels, however, a camera is often located so far from the model that the target field looks small in the image plane. In this case, a two-step approach is suggested that determines the interior and exterior orientation parameters separately. First, a target plate is placed near the camera to produce a sufficiently large target field in the image plane, and the interior orientation parameters are determined accurately using the optimization method. Next, assuming that the determined interior orientation parameters remain fixed for a locked camera setting, the exterior orientation parameters are obtained by a resection scheme from the target field in a given wind-tunnel coordinate system.

Fig. 5.4. Principal-point location as a function of the principal distance for a Sony zoom lens connected to a Hitachi camera. From Liu et al. (2000)

Fig. 5.5. The radial distortion coefficient as a function of the principal distance for a Sony zoom lens connected to a Hitachi camera. From Liu et al. (2000)


Table 5.1. Calibration for Hitachi CCD camera with 8 mm Cosmicar TV lens
